Why Tesla's "Full Self-Driving Beta 9" is not safe at any speed | Opinion

Tesla's "full self-driving" has been hyped and endlessly promised, but is still in development.

In a teenager's darkened bedroom (what's wrong with opening a window?), the Age of Empires IV beta might see Genghis Khan and his Mongol hordes threaten the lives of countless Chinese peasant farmers.

But on US roads, beta testing the latest (9.0) version of Tesla's Full Self-Driving (FSD) feature could put real road users and pedestrians in mortal danger, in an experiment none of them agreed to be part of.

Right now, about 800 Tesla employees and about 100 Tesla owners are driving FSD 9-enabled vehicles in 37 US states (the majority in California); a relatively minor v9.1 update was released at the end of July. They are feeding data back into Tesla's "neural networks", which are designed to learn from this experience and help improve the Autopilot and FSD systems. It's a drop in America's vast automotive ocean, but enough to raise questions.

Autopilot is Tesla's existing driver assistance package, built around adaptive cruise control, lane-keeping assist, automatic lane changes and self-parking.

The name has generated heated debate. I understand that even on a commercial airliner, autopilot is not the hands-free (and mind-free) "feet on the dashboard" experience Hollywood has made it out to be, but perception is everything, and using that name is naive at best and reckless at worst.

That makes marketing what is still an SAE Level 2 "Advanced Driver Assistance System" (there are six levels, 0 to 5) as "Full Self-Driving" even more dubious.

FSD relies almost exclusively on cameras and microphones; Tesla recently phased out radar and has never used the widely adopted "Light Detection and Ranging" (lidar) remote sensing technology, on the grounds that it's unnecessary.

In fact, at Tesla's Autonomy Day event in early 2019, CEO Elon Musk said that anyone relying on lidar in the quest for autonomous driving is on a "fool's errand."

Cynics might say compact cameras are a great way to keep unit costs down, but even if that approach is cheaper, adding a FLIR thermal imaging camera could shore up the current Achilles' heel of the camera-only approach: bad weather. Which brings us back to developing the system on public roads.

Admittedly, the Tesla employees using FSD 9 have gone through an internal quality and testing program, and the owners were selected for their outstanding driving records, but they are not design engineers and they will not necessarily do the right thing all the time.

These cars have no special systems to ensure the driver stays vigilant and attentive. And for the record, Argo AI, Cruise and Waymo test software updates at private, closed facilities, with specially trained drivers monitoring the vehicles.

One of the biggest steps forward with FSD 9 is that the system can now, under driver supervision, navigate intersections and city streets.

Musk suggested that FSD drivers be "paranoid" in their approach, assuming that something could go wrong at any moment.

Watching respected Detroit engineer Sandy Munro ride with Dirty Tesla's Chris (@DirtyTesla on social media, and president of the Michigan Tesla Owners Club) in the latter's FSD 9-equipped Model Y is illuminating.

Chris, an unabashed Tesla fan, confirms that "much remains to be done. It really makes a lot of mistakes."

He adds: "It's a lot looser than the public build of Autopilot, which seems locked into its lane. If it thinks it needs to move over the center line to get out of a cyclist's way, it will. You have to be ready for when it does that, and for when it does it when it doesn't need to."

Chris says that sometimes during a drive the system isn't "sure" of what it sees. "Definitely, sometimes I take over when it gets too close to a wall, too close to some barrels or something like that," he adds.

Speaking to Consumer Reports about FSD 9 testing, Selika Josiah Talbott, a professor at American University's School of Public Affairs in Washington, D.C., who studies autonomous vehicles, said the FSD Beta 9-equipped Teslas she has seen in videos behave "almost like a drunk driver," struggling to stay between the lane lines.

"It squirms to the left, it squirms to the right," she says. "While its right-hand turns seem fairly solid, its left-hand turns are almost wild."

And these are not early-stage teething problems. This is a technology that has been "almost ready" for a long time. Musk famously said FSD would be "feature complete" by the end of 2019. For years, Tesla has been charging customers for FSD while over-promising and under-delivering.

The idea is that the Tesla you buy today is FSD-capable, and an over-the-air update will activate the functionality you prepaid for as soon as it's ready.

In 2018, FSD cost $3,000 when ordered with the car (or $4,000 if added after purchase). The early-2019 drop to $2,000 no doubt delighted those who had already coughed up, but the price has risen steadily as development continues.

Autopilot became standard while the FSD option rose to $5,000. Then, in mid-2019, when Elon Musk announced full self-driving "in 18 months," it rose to $6,000, then to $7,000 and $8,000, and finally to $10,000 at the end of last year.

A couple of things here. According to Dirty Tesla's Chris, the FSD release notes reinforce the idea that "you always have to be careful, keep your hands on the wheel."

Even SAE Level 3 (a huge step up, and FSD 9 is not L3) states that "the driver must remain alert and ready to take control." That's not autonomous. That's not full self-driving.

So what's the point? Tesla owners are testing a software product they have already paid for and should have received years ago. And the need for constant monitoring surely makes the process more stressful, and perhaps less safe, as the driver tries to second-guess the system's next move.

In October 2019, Musk tweeted, "We will definitely have over a million robotaxis on the road next year. The fleet wakes up with an over-the-air update. That's all it takes."

The logic was that there are already plenty of Teslas on the road (20 million is an exaggeration), and that, via Tesla's yet-to-be-released smartphone app, your FSD investment would unlock the potential of a valuable, income-generating, fully autonomous asset.

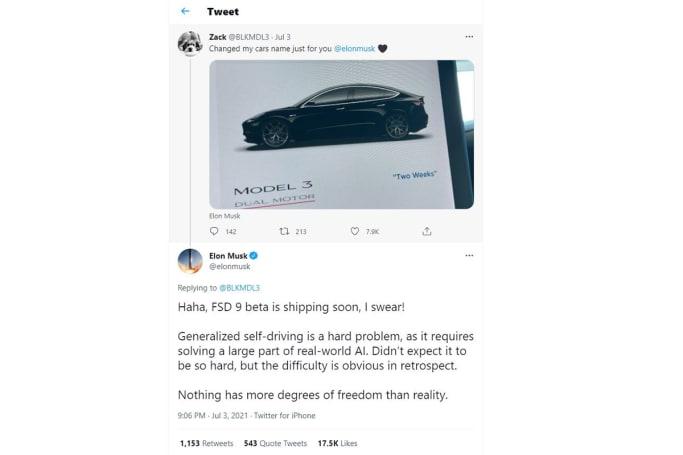

But in July of this year, Musk markedly changed his tune, tweeting: "Generalized self-driving is a hard problem, as it requires solving a large part of real-world AI. Didn't expect it to be so hard, but the difficulty is obvious in retrospect. Nothing has more degrees of freedom than reality."

Perhaps this is a case of better late than never, because however it is tested, a Level 5 autonomous Tesla that delivers on the promise of "full self-driving" in the near future looks about as likely as a light dusting of fresh powder snow on Uluru.

How long Tesla owners will have to wait for the FSD they paid for, in some cases years ago, and how satisfied they will be when (if?) it finally arrives, will be interesting to watch.